OpenAI Agents SDK

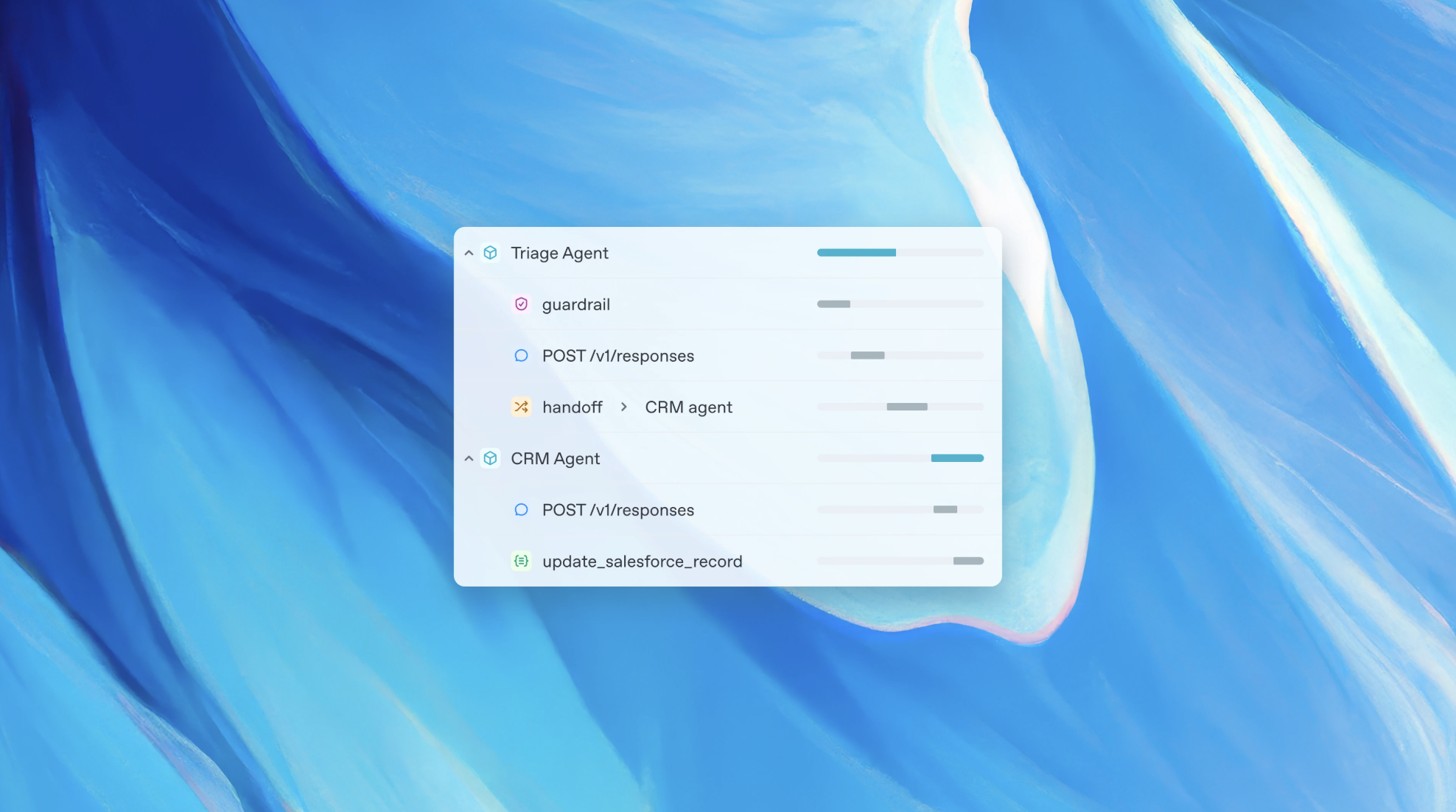

Building agents with the OpenAI Agents SDK and Pica MCP

This document demonstrates how to integrate the Pica MCP server with OpenAI’s Agents SDK.
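
As a rough orientation, the integration can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the repository's actual code: it assumes the Pica MCP server is launched over stdio with `npx @picahq/mcp` and reads its key from a `PICA_SECRET` environment variable, and the helper name `build_pica_server_params`, the agent instructions, and the prompt are hypothetical. `Agent`, `Runner`, and `MCPServerStdio` come from the `openai-agents` package.

```python
import asyncio
import os


def build_pica_server_params(secret: str) -> dict:
    """Return stdio launch parameters for the Pica MCP server.

    Assumption: the server starts with `npx @picahq/mcp` and reads its key
    from PICA_SECRET. Check the Pica documentation for the actual values.
    """
    return {
        "command": "npx",
        "args": ["-y", "@picahq/mcp"],
        "env": {"PICA_SECRET": secret},
    }


async def main() -> None:
    # Imported lazily so the helper above works without the SDK installed.
    from agents import Agent, Runner
    from agents.mcp import MCPServerStdio

    params = build_pica_server_params(os.environ["PICA_SECRET"])

    # The context manager starts the MCP server process and stops it on exit.
    async with MCPServerStdio(name="pica", params=params) as pica_server:
        agent = Agent(
            name="Pica assistant",  # hypothetical agent name
            instructions="Use the Pica tools to complete the user's request.",
            mcp_servers=[pica_server],
        )
        # Hypothetical prompt; the agent decides which Pica tools to call.
        result = await Runner.run(agent, "List my available connections.")
        print(result.final_output)
```

To try it, set `PICA_SECRET` and `OPENAI_API_KEY` in the environment, install `openai-agents`, and call `asyncio.run(main())`.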

GitHub Repository

@picahq/awesome-pica

Check out our GitHub repository to explore the code, contribute, or raise issues.